Wget Curl

wget

wget is a GNU utility for retrieving files over the web using the popular internet transfer protocols (HTTP, HTTPS, FTP). It's useful either for obtaining individual files or mirroring entire web sites, as it can convert absolute links in downloaded documents to relative links. The GNU wget manual is the definitive resource.

cURL works more like the traditional Unix cat command: it sends more to stdout and reads more from stdin, in an 'everything is a pipe' manner. By the same analogy, wget is more like cp. cURL is basically made for single-shot transfers of data. wget will also work with Tor; it is a question of having the Tor proxy set up correctly on your server and finding the additional options. Verbosity can be good: running wget or cURL with their respective "quiet" options will silence some of the output in scripts, but not all of it. cURL transfers data from or to a server using one of the protocols HTTP, HTTPS, FTP, FTPS, SCP, SFTP, TFTP, DICT, TELNET, LDAP or FILE, and its synopsis is curl [options] URL.
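The cat-versus-cp analogy can be sketched as follows. This is only an illustration: the helper name page_size is hypothetical and the URL is whatever you pass in.

```shell
# page_size is a hypothetical helper, not part of curl.
page_size() {
    # -s silences the progress meter; the body goes to stdout and is
    # piped straight into wc, so nothing is saved to disk.
    curl -s "$1" | wc -c
}

# wget has to be asked for stdout explicitly with -O -:
#   wget -q -O - https://example.com/ | wc -c
```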

| Wget | Curl |
|---|---|
| A simple tool designed to perform quick downloads | A much more powerful tool that can achieve a lot more than Wget |
| Command line only | Powered by libcurl |
| Supports only HTTP, HTTPS, and FTP | Supports many more protocols |

Some Useful wget Switches and Options

Usage: wget [options] url1 url2 ...

| Option | Purpose |
|---|---|
| -A, -R | Accept and reject lists; e.g., -A.jpg will download all .jpg files from the remote directory |
| --backup-converted | When converting links in a file, back up the original version with an .orig suffix; synonymous with -K |
| --backups=backups | Back up existing files with .1, .2, .3, etc. before overwriting them; the 'backups' value specifies the maximum number of backups made for each file |
| -c | Continue an interrupted download |
| --convert-links | Convert links in downloaded files to point to local files; synonymous with -k |
| -i file | Specify an input file from which to read URLs |
| -l depth | Specify the maximum recursion depth; the default is 5 |
| -m | Shortcut for mirroring options: -r -N -l inf --no-remove-listing, i.e., turns on recursion and time-stamping, sets infinite recursion depth and keeps FTP directory listings |
| -N | Turn on time-stamping |
| -O file | Specify the name of the output file, if you want it to be different from the name of the downloaded file |
| -p | Download the page requisites needed to display a web page (.css, .js, images, etc.) |
| -r | Download files recursively [RTFM here as it can get ugly fast] |
| -S | Print HTTP headers or FTP responses from remote servers |
| -T seconds | Set a timeout, in seconds, for an operation that takes too long |
| --user=user --password=password | Specify the username and/or password for HTTP/FTP logins |
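As a small sketch of how these options combine, the following resumes a partial download with a timeout. The helper name and the 30-second value are assumptions chosen for illustration.

```shell
# resume_download is a hypothetical helper name.
resume_download() {
    # -c continues a partial file already on disk;
    # -T 30 gives up on a stalled network operation after 30 seconds.
    wget -c -T 30 "$1"
}

# Usage (placeholder URL):
#   resume_download https://example.com/big.iso
```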

Some wget Examples

Basic file download:
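A minimal sketch, assuming a placeholder URL on example.com; fetch_file is a hypothetical wrapper so the command can be reused in a script.

```shell
# fetch_file is a hypothetical helper; pass any URL you are allowed to download.
fetch_file() {
    # With no options, wget saves the file in the current directory
    # under the name taken from the URL.
    wget "$1"
}

# Usage (placeholder URL):
#   fetch_file https://example.com/archive.tar.gz
```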

Download a file, rename it locally:
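One way to do this uses -O from the table above. The helper name and both file names are placeholders.

```shell
# fetch_as is a hypothetical helper: $1 = URL, $2 = local file name.
fetch_as() {
    # -O writes the download to the given file instead of the remote name.
    wget -O "$2" "$1"
}

# Usage (placeholder names):
#   fetch_as https://example.com/archive.tar.gz local.tar.gz
```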

Download multiple files:
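Two hedged variants: listing the URLs on the command line, or reading them from a file with -i. The helper names and the urls.txt file are assumptions.

```shell
# Both helpers are hypothetical names.
fetch_many() {
    # wget accepts any number of URLs and fetches them in order.
    wget "$@"
}

fetch_from_list() {
    # -i reads URLs, one per line, from the file given as $1.
    wget -i "$1"
}

# Usage (placeholder URLs and file):
#   fetch_many https://example.com/a.txt https://example.com/b.txt
#   fetch_from_list urls.txt
```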

Download a file with your HTTP or FTP username and password embedded in the URL:
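A sketch of the URL-embedded form for FTP, with hypothetical credentials and host. Keep in mind that anything typed on the command line can be visible to other users in the process list; the --user and --password options from the table have the same drawback.

```shell
# fetch_with_login is a hypothetical helper:
# $1 = user, $2 = password, $3 = host and path.
fetch_with_login() {
    # Credentials go between the scheme and the host: ftp://user:pass@host/path
    wget "ftp://$1:$2@$3"
}

# Usage (made-up credentials):
#   fetch_with_login alice s3cret ftp.example.com/pub/file.txt
```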

Retrieve a single web page and all its support files (css, images, etc.) and change the links to reference the downloaded files:
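This likely combines -p and -k (the short form of --convert-links). The helper name and URL are placeholders.

```shell
# fetch_page is a hypothetical helper name.
fetch_page() {
    # -p fetches the page requisites (css, images, scripts);
    # -k rewrites links in the saved page to point at the local copies.
    wget -p -k "$1"
}

# Usage (placeholder URL):
#   fetch_page https://example.com/article.html
```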

Retrieve the first three levels of tldp.org, saving them to local directory tldp:
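A possible command, reading "first three levels" as a recursion depth of 3; that mapping and the helper name are assumptions.

```shell
# fetch_tldp_levels is a hypothetical helper name.
fetch_tldp_levels() {
    # -r recurses, -l 3 limits the depth to three levels,
    # -P tldp saves everything under the local directory tldp.
    wget -r -l 3 -P tldp http://tldp.org/
}
```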

Create a five levels-deep mirror of TLDP, keeping its directory structure, re-pointing the links to local files, saving the activity log to tldplog:
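A sketch under the assumption that the depth is spelled out with -l 5 (which is also wget's default recursion depth); the helper name is hypothetical.

```shell
# mirror_tldp_logged is a hypothetical helper name.
mirror_tldp_logged() {
    # -r keeps the remote directory structure while recursing,
    # -k re-points links to the local files,
    # -o tldplog writes wget's messages to the file tldplog.
    wget -r -l 5 -k -o tldplog http://tldp.org/
}
```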

Download all JPEGs from a given web directory, but not its child or parent directories:
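One way to express this, assuming the files end in .jpg or .jpeg; the helper name and URL are placeholders.

```shell
# fetch_jpegs is a hypothetical helper name.
fetch_jpegs() {
    # -r -l 1 follows links one level deep from the starting page,
    # --no-parent never ascends to the parent directory,
    # -A keeps only files whose names end in the listed suffixes.
    wget -r -l 1 --no-parent -A 'jpg,jpeg' "$1"
}

# Usage (placeholder URL):
#   fetch_jpegs https://example.com/photos/
```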

Mirror a site in the specified local directory, converting links for local viewing, backing up the original HTML files locally as *.orig before rewriting the links:
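A sketch combining -m, -k and -K from the options table with -P for the target directory; the helper name, URL and directory are assumptions.

```shell
# mirror_site is a hypothetical helper: $1 = URL, $2 = local directory.
mirror_site() {
    # -m turns on the mirroring options, -k converts links for local viewing,
    # -K keeps each original file as *.orig, -P sets the download directory.
    wget -m -k -K -P "$2" "$1"
}

# Usage (placeholder URL and directory):
#   mirror_site https://example.com/ ./mirror
```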

cURL

cURL is a free software utility for transferring data over a network. Although cURL can retrieve files over the web like wget, it speaks many more protocols (HTTP/S, FTP/S, SCP, LDAP, IMAP, POP, SMTP, SMB, Telnet, etc.), and it can both send server commands and reliably read and interpret the responses. cURL can send HTTP POST requests to interact with HTML forms and buttons, for example, and if it receives a 3xx HTTP response (a redirect), cURL can follow the resource to its new location. Keep in mind that cURL thinks in terms of data streams, not necessarily in terms of tidy, human-readable files. The cURL manpage is the definitive resource.

Some cURL Examples

Get the index.html file from a web site, or save index.html locally with a specified file name, or save the file locally using its remote name:
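The three variants might look like this; the helper names and URLs are placeholders.

```shell
# All three helpers are hypothetical names.
show_index() {
    # With no options, curl writes the response body to stdout.
    curl "$1"
}

save_index_as() {
    # -o saves the body under the local name given as $2.
    curl -o "$2" "$1"
}

save_index() {
    # -O saves under the remote name, so the URL must end in a file name.
    curl -O "$1"
}

# Usage (placeholder URLs):
#   show_index https://example.com/
#   save_index_as https://example.com/ home.html
#   save_index https://example.com/index.html
```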

FTP -- get a particular file, or a directory listing:
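A hedged sketch for an anonymous FTP server; the host and paths are made up, and the helper names are hypothetical.

```shell
# ftp_get and ftp_list are hypothetical helpers: $1 = host, $2 = path.
ftp_get() {
    # A URL that names a file downloads it; -O keeps the remote name.
    curl -O "ftp://$1/$2"
}

ftp_list() {
    # A URL that ends in a slash asks for a directory listing instead.
    curl "ftp://$1/$2/"
}

# Usage (placeholder host):
#   ftp_get ftp.example.com pub/README
#   ftp_list ftp.example.com pub
```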

Download a file from a web site that uses a redirect script, like SourceForge (-L tells cURL to follow the Location header):
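For instance, with a placeholder URL and output name (the helper name is hypothetical):

```shell
# fetch_redirected is a hypothetical helper: $1 = URL, $2 = local file name.
fetch_redirected() {
    # -L follows redirects until the real file is reached;
    # -o names the local file, since the redirecting URL may not end in one.
    curl -L -o "$2" "$1"
}

# Usage (placeholder URL):
#   fetch_redirected https://example.com/download/latest tool.tar.gz
```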

Specify a port number:
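The port goes after the host name in the URL, separated by a colon. A minimal sketch with a hypothetical helper and a placeholder host:

```shell
# fetch_on_port is a hypothetical helper: $1 = host, $2 = port.
fetch_on_port() {
    curl "http://$1:$2/"
}

# Usage (placeholder host and port):
#   fetch_on_port example.com 8080
```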

Specify a username and password:
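A sketch using -u with made-up credentials; the helper name is hypothetical. If the password is left out (-u user), curl prompts for it instead.

```shell
# fetch_auth is a hypothetical helper: $1 = user, $2 = password, $3 = URL.
fetch_auth() {
    # -u sends the credentials as user:password.
    curl -u "$1:$2" "$3"
}

# Usage (made-up credentials):
#   fetch_auth alice s3cret https://example.com/private/
```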

Get a file over SSH (scp) using an RSA key for password-less login:
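A possible form, assuming a curl build with SCP support and a key pair in the default ~/.ssh location; the helper name, user, host and paths are placeholders.

```shell
# scp_get is a hypothetical helper:
# $1 = user, $2 = host, $3 = remote path, $4 = local file name.
scp_get() {
    # "user:" with an empty password makes curl rely on the key files.
    curl -u "$1:" --key "$HOME/.ssh/id_rsa" --pubkey "$HOME/.ssh/id_rsa.pub" \
         -o "$4" "scp://$2/$3"
}

# Usage (placeholder host; '~' is quoted so the remote side expands it):
#   scp_get alice example.com '~/file.txt' file.txt
```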

Get a file from a Samba server:
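A sketch assuming a curl build with SMB support; the server, share and credentials are made up, and the helper name is hypothetical.

```shell
# smb_get is a hypothetical helper:
# $1 = user (optionally 'DOMAIN\user'), $2 = password, $3 = server/share/path.
smb_get() {
    # -O saves the file under its remote name.
    curl -u "$1:$2" -O "smb://$3"
}

# Usage (made-up server and credentials):
#   smb_get alice s3cret server.example.com/share/file.txt
```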

Send an email using Gmail:
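A rough sketch over SMTPS. It assumes the account accepts password logins on smtp.gmail.com port 465 (in practice probably an app password) and that the message file already contains the From, To and Subject headers; the helper name and addresses are hypothetical.

```shell
# send_gmail is a hypothetical helper:
# $1 = sender address, $2 = password, $3 = recipient, $4 = message file.
send_gmail() {
    # --ssl-reqd refuses to continue without TLS;
    # --upload-file sends the file as the complete message.
    curl --ssl-reqd --url "smtps://smtp.gmail.com:465" \
         --user "$1:$2" --mail-from "$1" --mail-rcpt "$3" \
         --upload-file "$4"
}

# Usage (made-up addresses):
#   send_gmail me@gmail.com app-pass you@example.com mail.txt
```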

Get a file using an HTTP proxy that requires login:
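A sketch with a made-up proxy address and credentials; the helper name is hypothetical.

```shell
# fetch_via_proxy is a hypothetical helper:
# $1 = proxy URL, $2 = proxy user, $3 = proxy password, $4 = URL to fetch.
fetch_via_proxy() {
    # -x selects the proxy, -U supplies the proxy login.
    curl -x "$1" -U "$2:$3" "$4"
}

# Usage (made-up proxy):
#   fetch_via_proxy http://proxy.example.com:3128 alice s3cret https://example.com/file.txt
```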

Get the first or last 500 bytes of a file:
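Byte ranges are requested with -r, and they only work if the server supports them. The helper names and URL below are placeholders.

```shell
# first_bytes and last_bytes are hypothetical helper names.
first_bytes() {
    # Ranges count from zero, so 0-499 is the first 500 bytes.
    curl -r 0-499 "$1"
}

last_bytes() {
    # A leading minus counts back from the end of the file.
    curl -r -500 "$1"
}

# Usage (placeholder URL):
#   first_bytes https://example.com/big.log
#   last_bytes https://example.com/big.log
```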

Upload a file to an FTP server:
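A sketch using -T with made-up credentials and host; the helper name is hypothetical.

```shell
# ftp_upload is a hypothetical helper:
# $1 = local file, $2 = user, $3 = password, $4 = host and remote directory.
ftp_upload() {
    # -T uploads the file; because the URL ends in a slash, the remote
    # file keeps the local file's name.
    curl -T "$1" -u "$2:$3" "ftp://$4/"
}

# Usage (made-up host and credentials):
#   ftp_upload report.pdf alice s3cret ftp.example.com/incoming
```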

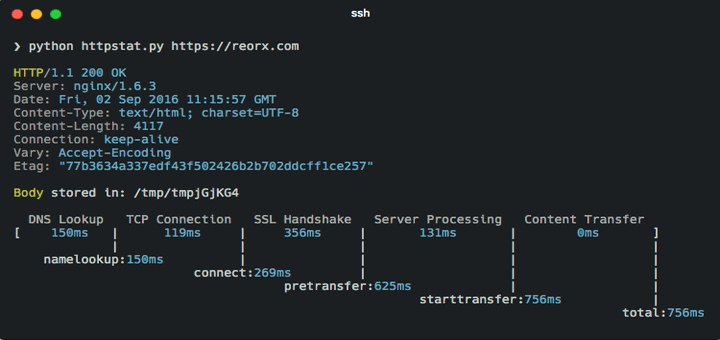

Show the HTTP headers returned by a web server, or save them to a file:
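Two hedged variants: -I for a headers-only request, and -D to dump the headers of a normal request into a file. The helper names, URL and file name are placeholders.

```shell
# show_headers and save_headers are hypothetical helper names.
show_headers() {
    # -I sends a HEAD request and prints only the response headers.
    curl -I "$1"
}

save_headers() {
    # -D writes the headers to the file given as $2;
    # -s hides the progress meter and -o /dev/null discards the body.
    curl -s -D "$2" -o /dev/null "$1"
}

# Usage (placeholder URL):
#   show_headers https://example.com/
#   save_headers https://example.com/ headers.txt
```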

Send POST data to an HTTP server:
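A minimal sketch with made-up form fields; the helper name and URL are hypothetical.

```shell
# post_data is a hypothetical helper: $1 = URL, $2 = url-encoded form data.
post_data() {
    # -d sends the data in the request body and switches the method to POST.
    curl -d "$2" "$1"
}

# Usage (made-up fields):
#   post_data https://example.com/form 'name=alice&lang=en'
```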

Emulate a fill-in form, using local file myfile.txt as the source for the 'file' field:
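A sketch using -F, which sends a multipart form the way a browser would. The upload URL and helper name are placeholders; the @ prefix attaches myfile.txt as a file upload, whereas < would send its contents as a plain field value.

```shell
# submit_form is a hypothetical helper: $1 = URL of the form handler.
submit_form() {
    # Fills the form field named 'file' from the local file myfile.txt.
    curl -F "file=@myfile.txt" "$1"
}

# Usage (placeholder URL):
#   submit_form https://example.com/upload
```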

Set a custom referrer or user agent:
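Both can be set in one request; the values below are made up, and the helper name is hypothetical.

```shell
# fetch_as_browser is a hypothetical helper:
# $1 = URL, $2 = referrer URL, $3 = user agent string.
fetch_as_browser() {
    # -e sets the Referer header, -A sets the User-Agent header.
    curl -e "$2" -A "$3" "$1"
}

# Usage (made-up values):
#   fetch_as_browser https://example.com/page https://example.org/ ExampleBrowser/1.0
```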
