The Archive Browser

Another few thousand DOS games are playable at the Internet Archive! Since our initial announcement in 2015, we’ve occasionally added new games to the collection, but this is our biggest update yet, ranging from tiny recent independent productions to long-forgotten big-name releases from decades ago.

To browse the latest collection, hit this link and look around.

The usual caveats apply: Sometimes the emulations are slower than they should be, especially on older machines. Not all games are enjoyable to play. And of course, we link manuals where we can, but not every game has a manual.

If you’ve been enjoying our “emulation in the browser” system over the years, then this is more of that. If you’re new to it or want to hear more about all this, keep reading.


A Recognition of Hard Work, and A Breathtaking View

The update of these MS-DOS games comes from a project called eXoDOS, which has expanded over the years from collecting DOS games for easy playability on modern systems to tracking down and capturing, as best as can be done, the full context of DOS games: from the earliest simple games of the IBM PC’s first couple of years to recently created independent productions that still work in the MS-DOS environment.

What makes the collection more than just a pile of old, now-playable games is how it takes on the problems of software preservation and history head-on. Having an old executable and a scanned copy of the manual represents only the first few steps. DOS has remained consistent in some ways over the last (nearly) 40 years, but a lot has changed under the hood, and programs were sometimes written to work only on very specific hardware with a very specific setup. They were released, sold some number of copies, and then disappeared off the shelves, if not from everyone’s memories.

It is all these extra steps of acquisition and configuration, under the hood, that represent the hardest work by the eXoDOS project, and I recognize that long-running, Herculean effort. As a result, the eXoDOS project has over 7,000 titles they’ve made work dependably and consistently.

Separately from the eXoDOS project, I’ve been putting a percentage of these games into the Emularity system on the Internet Archive for research, entertainment and quick online access to the programs. The issues that are introduced by this are mine and mine alone, and eXoDOS is not able to help with them. You can always mail me at jscott@archive.org with questions or technical concerns.

This should be all that needs to be said, but since the Archive is doing things a little strangely, there’s a lot to keep in mind before you really dive in (or to realize, when you come back with questions).

That Hilarious Problem With CD-ROMs


Putting these games into the Internet Archive has, over time, brought particular issues with browser-based emulation into sharp focus. Examples include keyboard collision, where the browser itself takes over input the emulator needs, and programs that need far more horsepower to run in a browser emulator than a user’s system can provide.

Some of these have solutions that aren’t always great (Buy faster hardware!), and in some cases the problem is currently terminal (these programs have been taken offline until a future date). But the most obvious and pressing problem is that games based on CD-ROMs take an enormous amount of time to load.

CD-ROMs were a boon to games of the early-to-late 1990s, allowing audio and video like never before. Depending on the tricks used, you got full-motion video (FMV), CD audio tracks played as background music, and levels and variety of content far beyond anything floppy disks could ever hold.

But it was also a very large amount of data (up to 700 megabytes per CD). It’s one thing to have that data sitting on a plastic disc in a local machine, and another to have a network connection pull the entire contents of the CD-ROM into memory and hold it there as virtual file resources. That is an enormous burden for the vast majority of Internet users out there: downloading multi-hundred-megabyte files into memory, keeping them there, and then losing it all when the browser window closes. Network speeds will improve over time, but for many folks this is probably the biggest show-stopper of them all.

If you find yourself loading up one of these games and facing down a hundred-megabyte download, consider one of the smaller games instead, unless it’s a title you really, really want to try out. Maybe in a few years we’ll look back at cable-modem speeds and laugh at the crawl, but for now, these downloads are a significant hurdle.

Some Jewels in the Mix

Luckily, there are some smaller games in this update that load relatively quickly and are really enjoyable to look at and to play. Here are some of my recommendations:

First, a game special to me: the IBM DOS version of Adventure, calling itself “Microsoft Adventure”. It’s actually a light rebranding of the game that started the text adventure world, “Colossal Cave” or ADVENT, by Don Woods and Will Crowther. Remixed to be sold by IBM and Microsoft, it’s how I first got into text adventures, and it boots up instantly, providing hours of fun if you’ve never tried it before.

Mr. Blobby, a 1994 DOS Platform game, has all the hallmarks of the genre – bonkers physics, bright and lovely graphics, and joyful music. Be sure to redefine the keys before you try to play it, because besides running and jumping, you can spin and take things. The game does not get less weird as you go along.

Super Munchers: The Challenge Continues is a 1991 remix of the original educational game that sent your “muncher” gathering up words representing a given topic or idea. The speed of the game, along with the learning aspect, makes this one of the more zesty “edutainment” titles available from the time.

Street Rod is a wonderfully compact 1989 racing game: it’s the 1960s, and you buy your first hot-rod, tune it up, and race it for money to buy better and better rides. It has a mouse-driven interface and is loaded with all sorts of tricks to make the game fit into a “mere” 600 kilobytes compressed. It starts out simple and is well worth the effort!

Digger, from 1983, is a Dig-Dug-Clone-but-Not that came out right as IBM PCs were starting to take off, and it’s a lovely little game: you steer a mining machine around, avoiding enemies and picking up diamonds. The most unintuitive thing is that you fire with the “F1” key, so hopefully your keyboard has one.

I’m also going to suggest Floppy Frenzy from Windmill Software, because it’s so much closer to the beginning of the IBM PC’s reign and you can see the difference in what the authors were comfortable with: the graphics are simpler, the movement is a little rougher, and the theme is geekiness incarnate. You’re a floppy disk dodging magnets and leaving traps for them, so you can gather the magnets up before time runs out. If you don’t make it, an angel comes down and brings you to Floppy Disk Heaven. Again, F1 is the unusual key, used here to leave traps.

There are many more, and I suggest you browse around, try things out, and really soak in that MS-DOS joy. (And feel free to leave comments with suggestions.)

Thanks so much for coming along on this emulation journey!

- Jason Scott, Internet Archive Software Curator

ArchiveBox is a powerful, self-hosted internet archiving solution to collect, save, and view sites you want to preserve offline.

You can set it up as a command-line tool, web app, and desktop app (alpha), on Linux, macOS, and Windows.

You can feed it URLs one at a time, or schedule regular imports from browser bookmarks or history, feeds like RSS, bookmark services like Pocket/Pinboard, and more. See input formats for a full list.

It saves snapshots of the URLs you feed it in several formats: HTML, PDF, PNG screenshots, WARC, and more out-of-the-box, with a wide variety of content extracted and preserved automatically (article text, audio/video, git repos, etc.). See output formats for a full list.

The goal is to sleep soundly knowing the part of the internet you care about will be automatically preserved in durable, easily accessible formats for decades after it goes down.


📦 Install ArchiveBox with Docker Compose (recommended) / Docker, or apt / brew / pip (see below).

No matter which setup method you choose, they all follow this basic process and provide the same CLI, Web UI, and on-disk data layout.

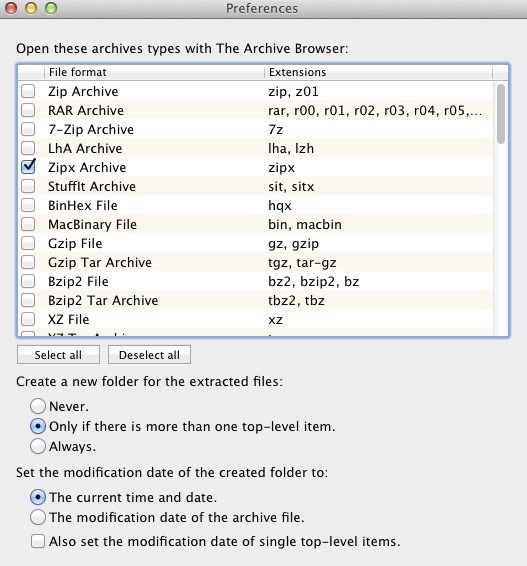


- Once you’ve installed ArchiveBox, run this in a new empty folder to get started

- Add some URLs you want to archive

- Then view your archived pages
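Assuming the `archivebox` CLI is already installed and on your `$PATH`, the three steps above might look like the shell sketch below. The function name `archivebox_quickstart` is hypothetical; the `init --setup`, `add`, and `server 0.0.0.0:8000` subcommands are the ones used elsewhere in this README.

```bash
# Sketch of the quickstart steps. Assumes `archivebox` is installed and on $PATH.
# archivebox_quickstart is a hypothetical helper, not part of ArchiveBox itself.
archivebox_quickstart() (
  # The body runs in a subshell, so the cd below does not leak into the caller.
  data_dir="$1"
  url="$2"
  mkdir -p "$data_dir" && cd "$data_dir" || exit 1

  archivebox init --setup || exit 1   # 1. initialize the data folder (index + config)
  archivebox add "$url" || exit 1     # 2. snapshot one URL with the enabled extractors
  archivebox server 0.0.0.0:8000      # 3. serve the Web UI (blocks until Ctrl+C)
)

# Usage:
# archivebox_quickstart ~/archivebox 'https://example.com'
```

Then open http://127.0.0.1:8000 in a browser. The subshell body is a deliberate choice: every `archivebox` command has to run inside the data folder, but your interactive shell stays where it was.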

⤵️ See the Quickstart below for more…

Key Features

- Free & open source, doesn’t require signing up for anything, stores all data locally

- Powerful, intuitive command line interface with modular optional dependencies

- Comprehensive documentation, active development, and rich community

- Extracts a wide variety of content out-of-the-box: media (youtube-dl), articles (readability), code (git), etc.

- Supports scheduled/realtime importing from many types of sources

- Uses standard, durable, long-term formats like HTML, JSON, PDF, PNG, and WARC

- Usable as a oneshot CLI, self-hosted web UI, Python API (BETA), REST API (ALPHA), or desktop app (ALPHA)

- Saves all pages to archive.org as well by default for redundancy (can be disabled for local-only mode)

- Planned: support for archiving content requiring a login/paywall/cookies (working, but ill-advised until some pending fixes are released)

- Planned: support for running JS during archiving to adblock, autoscroll, modal-hide, thread-expand…

🖥 Supported OSs: Linux/BSD, macOS, Windows (Docker/WSL) 👾 CPUs: amd64, x86, arm8, arm7 (raspi>=3)

⬇️ Initial Setup


Get ArchiveBox with docker-compose on macOS/Linux/Windows ✨ (highly recommended)

- First make sure you have Docker installed: https://docs.docker.com/get-docker/
- Download the [`docker-compose.yml`](https://raw.githubusercontent.com/ArchiveBox/ArchiveBox/master/docker-compose.yml) file.
- Start the server.
- Open [`http://127.0.0.1:8000`](http://127.0.0.1:8000).

This is the recommended way to run ArchiveBox because it includes all the extractors, like chrome, wget, youtube-dl, and git, plus full-text search with sonic and many other great features.

Get ArchiveBox with docker on macOS/Linux/Windows

First make sure you have Docker installed: https://docs.docker.com/get-docker/

Get ArchiveBox with apt on Ubuntu/Debian

This method should work on all Ubuntu/Debian-based systems, including x86, amd64, arm7, and arm8 CPUs (e.g. Raspberry Pis >=3).

If you're on Ubuntu >= 20.04, add the `apt` repository with `add-apt-repository` (on other Ubuntu/Debian-based systems, follow the ♰ instructions below).

♰ On other Ubuntu/Debian-based systems, add these sources directly to /etc/apt/sources.list (you may need to install some other dependencies manually, however).

Get ArchiveBox with brew on macOS

First make sure you have Homebrew installed: https://brew.sh/#install

Get ArchiveBox with pip on any other platforms (some extras must be installed manually)

First make sure you have [Python >= v3.7](https://realpython.com/installing-python/) and [Node >= v12](https://nodejs.org/en/download/package-manager/) installed.

⚡️ CLI Usage

- `archivebox setup/init/config/status/manage` to administer your collection
- `archivebox add/schedule/remove/update/list/shell/oneshot` to manage Snapshots in the archive
- `archivebox schedule` to regularly pull in fresh URLs from bookmarks/history/Pocket/Pinboard/RSS/etc.

🖥 Web UI Usage

Start the server with `archivebox server 0.0.0.0:8000` from inside your data folder, then open http://127.0.0.1:8000 to view the UI.

🗄 SQL/Python/Filesystem Usage

DEMO: https://demo.archivebox.io

Input formats

ArchiveBox supports many input formats for URLs, including Pocket & Pinboard exports, Browser bookmarks, Browser history, plain text, HTML, markdown, and more!

Click these links for instructions on how to prepare your links from these sources:

- TXT, RSS, XML, JSON, CSV, SQL, HTML, Markdown, or any other text-based format…

- Browser history or browser bookmarks (see instructions for: Chrome, Firefox, Safari, IE, Opera, and more…)

- Pocket, Pinboard, Instapaper, Shaarli, Delicious, Reddit Saved, Wallabag, Unmark.it, OneTab, and more…

See the Usage: CLI page for documentation and examples.

It also includes a built-in scheduled import feature with `archivebox schedule` and a browser bookmarklet, so you can pull in URLs from RSS feeds, websites, or the filesystem regularly or on demand.
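As a rough sketch of `archivebox schedule`, the helper below registers one scheduled import per feed listed in a text file. `schedule_feeds` and the one-URL-per-line file format are hypothetical; the `--every=day --depth=1` flags follow ArchiveBox's own schedule examples, but check `archivebox schedule --help` on your version before relying on them.

```bash
# Hypothetical helper: schedule_feeds <list_file> [every]
# Registers a scheduled import for each feed URL in list_file.
# Blank lines and lines starting with '#' are skipped.
schedule_feeds() {
  local list_file="$1" every="${2:-day}" feed
  while IFS= read -r feed || [ -n "$feed" ]; do
    case "$feed" in
      ''|'#'*) continue ;;
    esac
    # --depth=1 also archives the pages linked from the feed itself.
    # </dev/null keeps archivebox from reading the rest of the list on stdin.
    archivebox schedule --every="$every" --depth=1 "$feed" </dev/null || return 1
  done < "$list_file"
}

# Usage (from inside the data folder):
# schedule_feeds feeds.txt
```

The second argument is passed straight through to `--every`, so any value your ArchiveBox version accepts there works unchanged.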

Archive Layout

All of ArchiveBox’s state (including the index, snapshot data, and config file) is stored in a single folder called the “ArchiveBox data folder”. All archivebox CLI commands must be run from inside this folder, and you first create it by running archivebox init.

The on-disk layout is optimized to be easy to browse by hand and durable long-term. The main index is a standard index.sqlite3 database in the root of the data folder (it can also be exported as static JSON/HTML), and the archive snapshots are organized by date-added timestamp in the ./archive/ subfolder.

Each snapshot subfolder ./archive/<timestamp>/ includes a static index.json and index.html describing its contents, and the snapshot extractor outputs are plain files within the folder.
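Because the layout is plain folders, you can walk it with ordinary shell tools. The hypothetical `list_snapshots` helper below prints the timestamp of every `./archive/<timestamp>/` folder that contains an `index.json`; it reads nothing from `index.sqlite3`, so it also works on a copied or backed-up archive folder.

```bash
# Hypothetical helper: list_snapshots [data_dir]
# Prints snapshot timestamps by walking <data_dir>/archive/<timestamp>/.
list_snapshots() {
  local data_dir="${1:-.}" dir
  for dir in "$data_dir"/archive/*/; do
    # Skip folders without an index.json (and the literal pattern if nothing matched).
    [ -f "${dir}index.json" ] || continue
    basename "$dir"
  done
}

# Usage (from inside the data folder):
# list_snapshots
```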

Output formats

Inside each Snapshot folder, ArchiveBox saves these different types of extractor outputs as plain files:

./archive/<timestamp>/*

- Index: `index.html` & `index.json`, HTML and JSON index files containing metadata and details
- Title, Favicon, Headers: response headers, site favicon, and parsed site title
- SingleFile: `singlefile.html`, HTML snapshot rendered with headless Chrome using SingleFile
- Wget Clone: `example.com/page-name.html`, wget clone of the site, with a WARC saved to `warc/<timestamp>.gz`
- Chrome Headless:
  - PDF: `output.pdf`, printed PDF of the site using headless Chrome
  - Screenshot: `screenshot.png`, 1440x900 screenshot of the site using headless Chrome
  - DOM Dump: `output.html`, DOM dump of the HTML after rendering using headless Chrome
- Article Text: `article.html/json`, article text extraction using Readability & Mercury
- Archive.org Permalink: `archive.org.txt`, a link to the saved site on archive.org
- Audio & Video: `media/`, all audio/video files + playlists, including subtitles & metadata, with youtube-dl
- Source Code: `git/`, clone of any repository found on GitHub, Bitbucket, or GitLab links
- More coming soon! See the Roadmap…
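To see which of these outputs a given snapshot actually has, something like the hypothetical `snapshot_outputs` helper below works. The file and folder names are taken from the list of outputs; exact names may differ between ArchiveBox versions, and the wget clone is skipped because its path depends on the site's domain.

```bash
# Hypothetical helper: snapshot_outputs <snapshot_dir>
# Prints which extractor outputs exist in one ./archive/<timestamp>/ folder.
# Names come from the output list; adjust them for your ArchiveBox version.
snapshot_outputs() {
  local snap_dir="$1" name
  for name in index.json index.html singlefile.html output.pdf screenshot.png \
              output.html archive.org.txt media git warc; do
    if [ -e "$snap_dir/$name" ]; then
      printf '%s\n' "$name"
    fi
  done
}

# Usage:
# snapshot_outputs ./archive/1600000000.0
```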

It does everything out-of-the-box by default, but you can disable or tweak individual archive methods via environment variables / config.

Static Archive Exporting

You can export the main index to browse it statically without needing to run a server.

Note about large exports: These exports are not paginated, so exporting many URLs or the entire archive at once may be slow. Use the filtering CLI flags on the archivebox list command to export specific Snapshots or ranges.

The paths in the static exports are relative, so make sure to keep them next to your ./archive folder when backing them up or viewing them.
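A minimal sketch of a static export, assuming `archivebox list` with its `--html`, `--json`, and `--with-headers` flags (verify them with `archivebox list --help` on your version). The helper name `export_static_index` is hypothetical; it writes the exports into the data folder root so their relative paths keep pointing at `./archive`.

```bash
# Hypothetical helper: export_static_index [data_dir]
# Writes static HTML and JSON exports of the main index into the data folder
# root, next to ./archive, so the relative links inside them keep working.
export_static_index() (
  # Subshell body: the cd below does not affect the caller's directory.
  cd "${1:-.}" || exit 1
  archivebox list --html --with-headers > index.html || exit 1
  archivebox list --json --with-headers > index.json
)

# Usage:
# export_static_index ~/archivebox
```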

Dependencies

For better security, easier updating, and to avoid polluting your host system with extra dependencies, it is strongly recommended to use the official Docker image with everything preinstalled for the best experience.

To achieve high fidelity archives in as many situations as possible, ArchiveBox depends on a variety of 3rd-party tools and libraries that specialize in extracting different types of content. These optional dependencies used for archiving sites include:

- chromium/chrome (for screenshots, PDF, DOM HTML, and headless JS scripts)
- node & npm (for readability, mercury, and singlefile)
- wget (for plain HTML, static files, and WARC saving)
- curl (for fetching headers, favicon, and posting to Archive.org)
- youtube-dl (for audio, video, and subtitles)
- git (for cloning git repos)
- and more as we grow…

You don’t need to install every dependency to use ArchiveBox. ArchiveBox will automatically disable extractors that rely on dependencies that aren’t installed, based on what is configured and available in your $PATH.
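The automatic disabling described above comes down to checking which binaries are on `$PATH`. The hypothetical `check_archive_deps` helper below does that kind of check by hand; the binary names are assumptions (your Chrome may be installed as `google-chrome` or `chromium-browser`), and `archivebox --version` remains the authoritative dependency report.

```bash
# Hypothetical helper: report which external archiving tools are on $PATH.
# Binary names are assumptions; e.g. Chrome may be `google-chrome` on your system.
check_archive_deps() {
  local bin
  for bin in chromium node wget curl youtube-dl git; do
    if command -v "$bin" >/dev/null 2>&1; then
      printf '%s\tfound\n' "$bin"
    else
      printf '%s\tmissing\n' "$bin"
    fi
  done
}

# Usage:
# check_archive_deps | grep missing
```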

If you use Docker, you don’t have to install any of these manually: all dependencies are set up properly out-of-the-box.

However, if you prefer not using Docker, you can install ArchiveBox and its dependencies using your system package manager or pip directly on any Linux/macOS system. Just make sure to keep the dependencies up-to-date and check that ArchiveBox isn’t reporting any incompatibility with the versions you install.

Installing directly on Windows without Docker or WSL/WSL2/Cygwin is not officially supported, but some advanced users have reported getting it working.

Caveats

Archiving Private URLs

If you’re importing URLs containing secret slugs or pages with private content (e.g. Google Docs, unlisted videos, etc.), you may want to disable some of the extractor modules to avoid leaking private URLs to 3rd party APIs during the archiving process.
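For example, ArchiveBox submits every URL to archive.org by default (see Key Features above). A hypothetical helper like `disable_extractors` can print the `archivebox config --set` commands for the extractors you want off, so you can review them before running them. `SAVE_MEDIA` appears elsewhere in this README; `SAVE_ARCHIVE_DOT_ORG` is an assumption here, so confirm the exact option names with `archivebox config`, which lists the current settings.

```bash
# Hypothetical helper: print (not run) the config commands that turn off the
# named extractors. Each argument is an option suffix, e.g. ARCHIVE_DOT_ORG.
disable_extractors() {
  local name
  for name in "$@"; do
    printf 'archivebox config --set SAVE_%s=False\n' "$name"
  done
}

# Review the output first, then run it from inside the data folder:
# disable_extractors ARCHIVE_DOT_ORG | sh
```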

Security Risks of Viewing Archived JS

Be aware that malicious archived JS can access the contents of other pages in your archive when viewed. Because the Web UI serves all viewed snapshots from a single domain, they share a request context and typical CSRF/CORS/XSS/CSP protections do not work to prevent cross-site request attacks. See the Security Overview page for more details.

Saving Multiple Snapshots of a Single URL

Support for saving multiple snapshots of each site over time will be added eventually (along with the ability to view diffs of the changes between runs). For now, ArchiveBox is designed to archive each URL with each extractor type only once. A workaround to take multiple snapshots of the same URL is to make them slightly different by adding a hash (#) fragment to the end of the URL.
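Concretely, the same page can be added twice as `https://example.com#2020-10-24` and `https://example.com#2020-10-25`. The hypothetical `snapshot_variant_url` helper below builds such a URL, replacing any existing fragment with a tag (today's date by default). Stripping the old fragment is a design choice for this sketch; skip it if your URLs rely on fragments for routing.

```bash
# Hypothetical helper: snapshot_variant_url <url> [tag]
# Gives the URL a new #fragment so ArchiveBox treats it as a different URL.
snapshot_variant_url() {
  local base="${1%%#*}"                  # drop any existing fragment
  local tag="${2:-$(date +%Y-%m-%d)}"    # default tag: today's date
  printf '%s#%s\n' "$base" "$tag"
}

# Usage:
# archivebox add "$(snapshot_variant_url 'https://example.com')"
```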

Storage Requirements

Because ArchiveBox is designed to ingest a firehose of browser history and bookmark feeds to a local disk, it can be much more disk-space intensive than a centralized service like the Internet Archive or Archive.today. However, as storage space gets cheaper and compression improves, you should be able to use it continuously over the years without having to delete anything.

ArchiveBox can use anywhere from ~1 GB per 1000 articles to ~50 GB per 1000 articles, mostly depending on whether you’re saving audio & video using SAVE_MEDIA=True and whether you lower MEDIA_MAX_SIZE=750mb.
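The per-1000-article figures above make a quick back-of-the-envelope estimate easy. For example, 20,000 bookmarks come to about 20 GB at ~1 GB per 1000 articles, and about 1,000 GB (roughly 1 TB) at ~50 GB per 1000 articles. The hypothetical `estimate_storage_gb` helper does that arithmetic in whole GB, rounding up.

```bash
# Hypothetical helper: estimate_storage_gb <articles> <gb_per_1000_articles>
# Prints the estimate in whole GB, rounded up (integer shell arithmetic).
estimate_storage_gb() {
  local articles="$1" gb_per_1000="$2"
  echo $(( (articles * gb_per_1000 + 999) / 1000 ))
}

# Usage:
# estimate_storage_gb 20000 1    # → 20   (low end)
# estimate_storage_gb 20000 50   # → 1000 (high end, saving media)
```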

Storage requirements can be reduced by using a compressed/deduplicated filesystem like ZFS/BTRFS, or by turning off extractor methods you don’t need. Don’t store large collections on older filesystems like EXT3/FAT, as they may not be able to handle more than 50k directory entries in the archive/ folder.
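Before a collection outgrows an older filesystem, you can count the entries in `archive/` yourself. The hypothetical `check_archive_entries` helper below warns when the count passes a limit; the 50,000 default comes from the paragraph above and is a rough guideline, not a hard filesystem constant.

```bash
# Hypothetical helper: check_archive_entries [data_dir] [limit]
# Prints the number of snapshot folders in archive/; returns 1 with a warning
# on stderr if the count exceeds the limit (default 50000, per the text above).
check_archive_entries() {
  local data_dir="${1:-.}" limit="${2:-50000}" count
  count=$(find "$data_dir/archive" -mindepth 1 -maxdepth 1 -type d | wc -l)
  count=$((count))   # normalize: some wc implementations pad with spaces
  if [ "$count" -gt "$limit" ]; then
    echo "warning: $count entries in $data_dir/archive exceeds $limit" >&2
    return 1
  fi
  echo "$count"
}
```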

Try to keep the index.sqlite3 file on a local drive (not a network mount), ideally an SSD for maximum performance; the archive/ folder, however, can be on a network mount or spinning HDD.

Background & Motivation

The aim of ArchiveBox is to enable more of the internet to be archived by empowering people to self-host their own archives. The intent is for all the web content you care about to be viewable with common software in 50 - 100 years without needing to run ArchiveBox or other specialized software to replay it.

Vast treasure troves of knowledge are lost every day on the internet to link rot. As a society, we have an imperative to preserve some important parts of that treasure, just like we preserve our books, paintings, and music in physical libraries long after the originals go out of print or fade into obscurity.

Whether it’s to resist censorship by saving articles before they get taken down or edited, or just to save a collection of early 2010s flash games you love to play, having the tools to archive internet content enables you to save the stuff you care most about before it disappears.

Image from WTF is Link Rot?

The balance between the permanence and ephemeral nature of content on the internet is part of what makes it beautiful. I don’t think everything should be preserved in an automated fashion, making all content permanent and never removable, but I do think people should be able to decide for themselves and effectively archive specific content that they care about.

Because modern websites are complicated and often rely on dynamic content, ArchiveBox archives the sites in several different formats beyond what public archiving services like Archive.org/Archive.is save. Using multiple methods and the market-dominant browser to execute JS ensures we can save even the most complex, finicky websites in at least a few high-quality, long-term data formats.

Comparison to Other Projects

▶ Check out our community page for an index of web archiving initiatives and projects.

A variety of open and closed-source archiving projects exist, but few provide a nice UI and CLI to manage a large, high-fidelity archive collection over time.

ArchiveBox tries to be a robust, set-and-forget archiving solution suitable for archiving RSS feeds, bookmarks, or your entire browsing history (beware, it may be too big to store), including private/authenticated content that you wouldn’t otherwise share with a centralized service (this is not recommended due to JS replay security concerns).

Comparison With Centralized Public Archives

Not all content is suitable to be archived in a centralized collection, whether because it’s private, copyrighted, too large, or too complex. ArchiveBox hopes to fill that gap.

By having each user store their own content locally, we can save much larger portions of everyone’s browsing history than a shared centralized service would be able to handle. The eventual goal is to work towards federated archiving where users can share portions of their collections with each other.

Comparison With Other Self-Hosted Archiving Options

ArchiveBox differentiates itself from similar self-hosted projects by providing a comprehensive CLI for managing your archive, a Web UI that can be used either independently or together with the CLI, and a simple on-disk data format that can be used without either.

ArchiveBox is neither the highest fidelity, nor the simplest tool available for self-hosted archiving, rather it’s a jack-of-all-trades that tries to do most things well by default. It can be as simple or advanced as you want, and is designed to do everything out-of-the-box but be tuned to suit your needs.

If being able to archive very complex interactive pages with JS and video is paramount, check out ArchiveWeb.page and ReplayWeb.page.

If you prefer a simpler, leaner solution that archives page text in markdown and provides note-taking abilities, check out Archivy or 22120.

For more alternatives, see our list here…

Internet Archiving Ecosystem

Whether you want to learn which organizations are the big players in the web archiving space, want to find a specific open-source tool for your web archiving need, or just want to see where archivists hang out online, our Community Wiki page serves as an index of the broader web archiving community. Check it out to learn about some of the coolest web archiving projects and communities on the web!

- Community Wiki
  - The Master Lists: community-maintained indexes of archiving tools and institutions.
  - Web Archiving Software: open source tools and projects in the internet archiving space.
  - Reading List: articles, posts, and blogs relevant to ArchiveBox and web archiving in general.
  - Communities: a collection of the most active internet archiving communities and initiatives.

- Check out the ArchiveBox Roadmap and Changelog

- Learn why archiving the internet is important by reading the “On the Importance of Web Archiving” blog post.

- Reach out to me for questions and comments via @ArchiveBoxApp or @theSquashSH on Twitter

Need help building a custom archiving solution?

✨ Hire the team that helps build ArchiveBox to work on your project. (we’re @MonadicalSAS on Twitter)

(They also do general software consulting across many industries)

We use the Github wiki system and Read the Docs (WIP) for documentation.

You can also access the docs locally by looking in the ArchiveBox/docs/ folder.

Getting Started

Reference

- Python API (alpha)

- REST API (alpha)

More Info

All contributions to ArchiveBox are welcomed! Check our issues and Roadmap for things to work on, and please open an issue to discuss your proposed implementation before working on things! Otherwise we may have to close your PR if it doesn’t align with our roadmap.

Low hanging fruit / easy first tickets:

Setup the dev environment


#### 1. Clone the main code repo (making sure to pull the submodules as well)

```bash
git clone --recurse-submodules https://github.com/ArchiveBox/ArchiveBox
cd ArchiveBox
git checkout dev  # or the branch you want to test
git submodule update --init --recursive
git pull --recurse-submodules
```

#### 2. Option A: Install the Python, JS, and system dependencies directly on your machine

```bash
# Install ArchiveBox + python dependencies
python3 -m venv .venv && source .venv/bin/activate && pip install -e '.[dev]'
# or: pipenv install --dev && pipenv shell

# Install node dependencies
npm install
# or
archivebox setup

# Check to see if anything is missing
archivebox --version
# install any missing dependencies manually, or use the helper script:
./bin/setup.sh
```

#### 2. Option B: Build the docker container and use that for development instead

```bash
# Optional: develop via docker by mounting the code dir into the container
# if you edit e.g. ./archivebox/core/models.py on the docker host, runserver
# inside the container will reload and pick up your changes
docker build . -t archivebox
# mount ./data for init too, so the server below finds an initialized collection
docker run -it -v "$PWD/data:/data" archivebox init --setup
docker run -it -p 8000:8000 -v "$PWD/data:/data" -v "$PWD/archivebox:/app/archivebox" archivebox server 0.0.0.0:8000 --debug --reload
# (remove the --reload flag and add the --nothreading flag when profiling with the django debug toolbar)
```

Common development tasks

See the ./bin/ folder and read the source of the bash scripts within. You can also run all these in Docker. For more examples, see the GitHub Actions CI/CD tests that are run: .github/workflows/*.yaml.

Run in DEBUG mode


```bash
archivebox config --set DEBUG=True
# or
archivebox server --debug ..
```

Build and run a Github branch


```bash
docker build -t archivebox:dev https://github.com/ArchiveBox/ArchiveBox.git#dev
docker run -it -v $PWD:/data archivebox:dev ..
```

Run the linters


```bash
./bin/lint.sh
```

(uses `flake8` and `mypy`)

Run the integration tests


```bash
./bin/test.sh
```

(uses `pytest -s`)

Make migrations or enter a django shell


Make sure to run this whenever you change things in `models.py`.

```bash
cd archivebox/
./manage.py makemigrations

cd path/to/test/data/
archivebox shell
archivebox manage dbshell
```

Build the docs, pip package, and docker image


(Normally CI takes care of this, but these scripts can be run to do it manually)

```bash
./bin/build.sh
# or individually:
./bin/build_docs.sh
./bin/build_pip.sh
./bin/build_deb.sh
./bin/build_brew.sh
./bin/build_docker.sh
```

Roll a release


(Normally CI takes care of this, but these scripts can be run to do it manually)

```bash
./bin/release.sh
# or individually:
./bin/release_docs.sh
./bin/release_pip.sh
./bin/release_deb.sh
./bin/release_brew.sh
./bin/release_docker.sh
```

Further Reading

- Home: https://archivebox.io

- Demo: https://demo.archivebox.io

- Docs: https://docs.archivebox.io

- Wiki: https://wiki.archivebox.io

- Issues: https://issues.archivebox.io

- Forum: https://forum.archivebox.io

- Releases: https://releases.archivebox.io

- Donations: https://github.com/sponsors/pirate

This project is maintained mostly in my spare time with the help from generous contributors and Monadical (✨ hire them for dev work!).

Sponsor this project on Github

✨ Have spare CPU/disk/bandwidth and want to help the world? Check out our Good Karma Kit.